Artificial Intelligence risk management

As organisations continue to embrace the transformative power of artificial intelligence (AI), it is crucial to address the associated risks throughout development, deployment, and use. The primary goal of AI risk management is to improve organisations' ability to incorporate trustworthiness considerations into their AI systems. By doing so, organisations can foster responsible AI development and mitigate potential harms.

Effective AI risk management navigates uncertainty.

An AI risk management framework (RMF) offers a comprehensive approach to managing these risks, promoting trustworthiness, and ensuring responsible AI practices. The framework provides guidelines for identifying, assessing, and mitigating risks associated with AI systems. It encourages a proactive approach to risk management throughout the AI lifecycle.

Osmond helps you navigate the complex landscape of AI risks by implementing such an AI RMF and the corresponding governance.

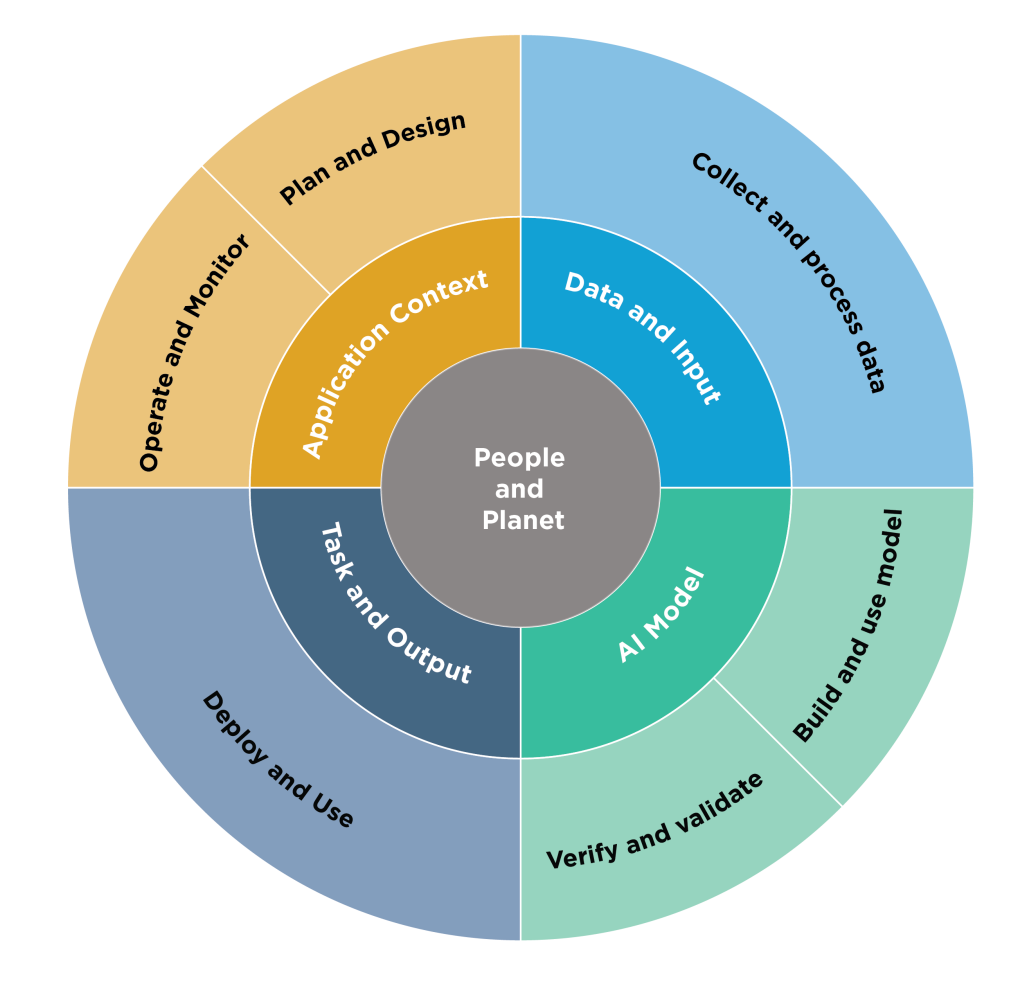

Lifecycle and Key Dimensions of an AI System. (Source: NIST)

We’re here to help!

Contact Us


Copyright Osmond GmbH, 2025